Houston, we have a problem: looking at the following screen, my SSD is showing as faulted (although no errors are recorded either), so I need to investigate why. Hopefully it's still under warranty; if it has actually failed, this will be the second time this SSD has been replaced!

Attempts to manually online the disk return no error, but they don't work either, so I'm not entirely sure what happened there. I did have to shut down the box and move it, so I re-seated all the connectors, but it still wouldn't let me re-enable the disk.

Worth noting: even with this fault the volume remained online, just without the cache enabled. That meant I was able to storage vMotion all the VMs off it, then delete and re-create the volume (this time without any mirroring, for maximum performance).

Once I had storage vMotioned the test VM back (again, no downtime; good old ESX!), I ran some more Iometer tests and performance looked a lot better (see below).
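If you can reach a shell on the appliance, `zpool status` also lists each device's state. That is another way to spot a faulted cache SSD like the one above. The following is a minimal, hypothetical Python helper that scans that text output and reports any device not in the `ONLINE` state. The function name `unhealthy_devices` is made up. The sample output is invented for illustration: only the volume name `fast` and the SSD name `c3d1` come from this post, and the other device names are placeholders.

```python
from typing import List, Tuple

# Device states that `zpool status` can show in its config table.
STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}


def unhealthy_devices(status_text: str) -> List[Tuple[str, str]]:
    """Return (name, state) pairs for config-table rows whose state is not ONLINE."""
    results = []
    in_config = False
    for line in status_text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "NAME" and "STATE" in fields:
            in_config = True  # the device table starts after this header row
            continue
        if fields[0] == "errors:":
            in_config = False  # the table ends at the errors summary
            continue
        # Section labels such as "cache" have no state column and are skipped.
        if in_config and len(fields) >= 2 and fields[1] in STATES:
            if fields[1] != "ONLINE":
                results.append((fields[0], fields[1]))
    return results


# Hypothetical sample output, for illustration only.
SAMPLE = """\
  pool: fast
 state: ONLINE
config:

        NAME        STATE     READ WRITE CKSUM
        fast        ONLINE       0     0     0
          raidz1-0  ONLINE       0     0     0
            c1d0    ONLINE       0     0     0
            c1d1    ONLINE       0     0     0
            c2d0    ONLINE       0     0     0
            c2d1    ONLINE       0     0     0
        cache
          c3d1      FAULTED      0     0     0

errors: No known data errors
"""

if __name__ == "__main__":
    for name, state in unhealthy_devices(SAMPLE):
        print(f"{name}: {state}")  # prints "c3d1: FAULTED"
```

On a live box you would feed it the text of `zpool status fast` instead of the sample. Parsing the human-readable output is fragile, so treat this as a quick check rather than a monitoring tool.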

If you look in Data Management/Data Sets and then click on your volume, you can see how much I/O is going to each individual disk in the volume. In my case the SSD (c3d1) had no I/O at all. Clicking on the name of the volume shown in green (in my case it's called "fast") then shows you the status of the physical disks.
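The check described above looks at each disk's share of the volume's I/O and flags any disk that is doing nothing. It can be sketched in a few lines of Python. The per-disk operation counts below are hypothetical numbers. They stand in for whatever the Data Sets view (or `zpool iostat -v`) reports. Only the SSD name `c3d1` comes from this post, and the function names are illustrative.

```python
from typing import Dict, List


def io_share(ops_per_disk: Dict[str, int]) -> Dict[str, float]:
    """Fraction of the volume's total operations handled by each disk."""
    total = sum(ops_per_disk.values())
    if total == 0:
        # No I/O anywhere: report zero for every disk rather than divide by zero.
        return {disk: 0.0 for disk in ops_per_disk}
    return {disk: ops / total for disk, ops in ops_per_disk.items()}


def idle_disks(ops_per_disk: Dict[str, int]) -> List[str]:
    """Disks in the volume that served no I/O at all, sorted by name."""
    return sorted(disk for disk, ops in ops_per_disk.items() if ops == 0)


# Hypothetical counters: a working cache SSD should be busy under a benchmark,
# so a zero here is the symptom described above.
SAMPLE_OPS = {"c1d0": 1200, "c1d1": 1180, "c2d0": 1210, "c2d1": 1195, "c3d1": 0}

if __name__ == "__main__":
    for disk, share in io_share(SAMPLE_OPS).items():
        print(f"{disk}: {share:.1%}")
    print("idle:", idle_disks(SAMPLE_OPS))
```

A zero on its own does not prove the disk has failed. It just tells you which device's status to check next.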

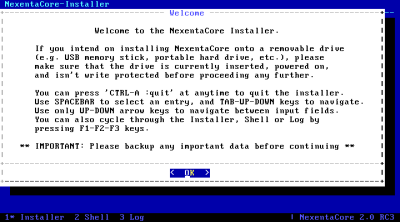

# Nexentastor install to USB drive: how to

I encountered this problem in my lab. I have the following configuration physically installed on an HP Microserver for testing (I will probably put it into a VM later on, however):

- An 8GB USB flash drive holding the boot OS

And the following configured into a single volume, accessed over NFSv3 (see this post for how to do that):

- 160GB 7.2k RPM SATA disks forming a raidz1 volume

A quick benchmark using Iometer showed that it was being outperformed on all I/O types by my Iomega IX4-200d. That is odd: my Nexentastor config should be using an SSD as a cache for the volume, which ought to make it faster than the IX4. So I decided to investigate.
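As a rough sanity check on a raidz1 volume like the one above: raidz1 gives up about one disk's worth of space to parity, so usable capacity is roughly (n - 1) x disk size before ZFS overhead. The following is a minimal sketch of that arithmetic. The function name is made up, and the disk count of four in the example is a hypothetical value chosen only for illustration.

```python
def raidz1_usable_gb(disk_count: int, disk_size_gb: float) -> float:
    """Approximate usable space of a single raidz1 vdev, in the same units as disk_size_gb.

    raidz1 stores one disk's worth of parity, so usable space is about
    (n - 1) * size. ZFS metadata and reserved space reduce this further,
    so treat the result as an upper bound.
    """
    if disk_count < 2:
        raise ValueError("raidz1 needs at least two disks (one data, one parity)")
    return float((disk_count - 1) * disk_size_gb)


if __name__ == "__main__":
    # Hypothetical: four 160GB disks.
    print(raidz1_usable_gb(4, 160))  # prints 480.0
```

Drive makers quote decimal gigabytes, so the OS will report somewhat less than this figure.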
